With a background in creating visual displays for the military since 1966 and extensive research in the field of visual perception, Thomas Furness III had the experience the Air Force needed. After years of fighting for funding, Furness finally got the go-ahead to prototype a state-of-the-art control system at Wright-Patterson Air Force Base in Ohio. In 1982, he demonstrated a working model of the Visually Coupled Airborne Systems Simulator, or VCASS. Test pilots wore its oversized helmet, which resembled Darth Vader’s helmet in the movie Star Wars, and sat in a cockpit mockup.

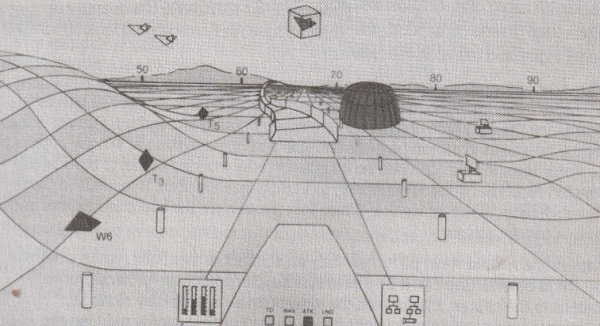

Instead of a normal view, pilots saw only an abstract or symbolic representation of the world outside. These synthetic images were projected onto screens in the helmet, masking off the view outside. Seeing only symbolic representations of landmarks, flight paths, and potential hazards reduced the distraction caused by an overload of visual information. This works because symbolic information, like that on a map, is much easier to decipher than detailed aerial pictures. Information is reduced to the minimum that’s necessary for achieving a goal, navigation in this case.

The VCASS system used the world’s first 6D position and orientation tracker, from a company called Polhemus, to monitor where the pilot was looking. Electromagnetic pulses, generated by a source, were picked up by a small sensor mounted on the helmet.

Processing the signal yielded an accurate reading of the current position and orientation of the helmet. Using this information, the computer rendered the appropriate view. Because the helmet obscured the view outside the cockpit, the pilot was immersed in a symbolic world.
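To make the idea of rendering "the appropriate view" concrete, here is a minimal sketch of how a tracked helmet pose can be used to re-express a point in the world relative to the pilot's head. It is an illustration only, not the VCASS software: the function name `world_to_head`, the axis convention (y up, looking down the negative z axis), and the omission of roll are all assumptions made for this example.

```python
import math

def world_to_head(point, head_pos, yaw, pitch):
    """Express a world-space point in the helmet's own frame (hypothetical helper).

    Assumed convention: right-handed axes, y is up, and a helmet with
    yaw = pitch = 0 looks down the negative z axis. Angles are in radians;
    positive yaw turns the head left, positive pitch tilts it up.
    Roll is left out to keep the sketch short.
    """
    # Step 1: measure the point relative to the helmet's position.
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    dz = point[2] - head_pos[2]

    # Step 2: undo the helmet's yaw (rotate by -yaw about the y axis).
    cy, sy = math.cos(yaw), math.sin(yaw)
    x1 = cy * dx - sy * dz
    y1 = dy
    z1 = sy * dx + cy * dz

    # Step 3: undo the helmet's pitch (rotate by -pitch about the x axis).
    cp, sp = math.cos(pitch), math.sin(pitch)
    x2 = x1
    y2 = cp * y1 + sp * z1
    z2 = -sp * y1 + cp * z1
    return (x2, y2, z2)

# A landmark 5 units to the pilot's left appears dead ahead (negative z)
# once the pilot turns the helmet 90 degrees to the left.
ahead = world_to_head((-5.0, 0.0, 0.0), (0.0, 0.0, 0.0), math.pi / 2, 0.0)
```

A renderer would apply this kind of transform to every symbol in the scene each time the tracker reports a new pose, so the picture stays fixed in the world as the head moves.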

Technology and funding allowed Furness to render more complex images than Sutherland’s simple wire-frame cubes. In addition, custom 1-inch-diameter CRTs with 2000 scan lines projected the images, almost four times the resolution of a typical TV set of the day. Virtual environments could be viewed in much more detail than anything commercially available at that time. The Air Force saw enough promise in VCASS that a second phase was funded, known as Super Cockpit.

By rethinking the entire process of flying a jet fighter, Furness created a powerful yet natural method of interacting with and controlling an aircraft’s complex machinery. His tools were the computer and the head-mounted display. They allowed him to construct a virtual world that gathered the storm of navigation, radar, weapons, and flight-control data into a single manageable form. Symbolic representations of the outside world and sensor data provided a filtered view of reality, thus simplifying the operation of the aircraft.

VCASS, one of the most advanced simulators developed, demonstrated the effectiveness of virtual reality techniques. Furness joined Engelbart, Sutherland, and Krueger in tearing down the barrier separating computers from their users. Removing this barrier allowed pilots to enter a strange new world carved out of the silicon of the computer. Radar became their eyes and ears, servos and electromechanical linkages their muscles, and the plane obeyed their spoken commands. Flying would never be the same.

So far, researchers probing the man-machine interface had focused primarily on the simulation of sights and sounds. From Sutherland’s initial experiments with head-mounted displays to Krueger’s playful interaction using video cameras and projection systems to Furness’s integrated HMD and 3-D sound environment, all three researchers explored the visual and audio dimensions of the human perceptual system. Not since Heilig and his Sensorama had anyone focused much attention on recreating tactile or force-feedback cues.

Even without sight, our sense of touch allows us to construct a detailed model of the world around us. The roughness of concrete, the smoothness of glass, and the pliability of rubber communicate detailed cues about our environment. Theoretically, computers could create a virtual world using tactile feedback just as they had with sights and sounds. Frederick Brooks, at the University of North Carolina (UNC), set out to chart this unexplored territory.

Ivan Sutherland’s vision, defined in his 1965 paper “The Ultimate Display,” became the basis for Brooks’ research in the early 1970s. Realizing that little work was being done with force or tactile feedback, Brooks decided to pursue combining computer graphics with force-feedback devices. He not only agreed with Sutherland and Engelbart that computers could be mind- or intelligence-amplification devices; he set out to show how it could be done.

One early project by Brooks, called GROPE-II, proposed a unique molecular docking tool for chemists. Detecting allowable and forbidden docking sites between a drug and a protein or nucleic acid is crucial in designing effective drugs. Because both drug and nucleic acid molecules are complex 3-D structures, locating effective docking sites is a daunting task.

Imagine two complex tinker-toy structures two to three feet high. Your job is to manipulate each model, examining how well a knob or groove on one model docks with those on another. There might be hundreds of potential sites to test. Brooks wanted to create a tool capable of simulating the physical feel of docking a molecule. He reasoned that chemists would be much more effective if they could get their hands on a molecule and actually feel the tug and pull of docking forces.

Tinker-toy representations of two molecules could be rendered by the computer. Chemists could then use a special device to grab one of the molecules and attempt to dock it with the other. An Argonne remote manipulator (ARM) was modified for this purpose. Originally used for handling radioactive materials, ARMs were operated by a mechanical hand-grip that controlled a remote robotic arm.

UNC acquired a surplus ARM and modified it for its purposes by adding extra motors to resist the motion of the hand-grip. Controlled by a computer, the ARM exerted physical force against the operator’s hand.
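One common way to have a computer decide how hard such motors should push back is to treat a virtual surface as a stiff spring. The sketch below illustrates that general idea only; it is not a description of how GROPE-II computed its forces. The function name `wall_force`, the one-dimensional wall, and the stiffness and force-limit values are all assumptions chosen for the example.

```python
def wall_force(grip_x, wall_x=0.0, stiffness=200.0, max_force=20.0):
    """Resisting force in newtons for a virtual wall filling the region x < wall_x.

    Hypothetical spring model: the force grows in proportion to how far the
    hand-grip has pushed into the wall, and is capped so the motors are
    never asked for more than max_force. All parameter values are made up
    for illustration.
    """
    penetration = wall_x - grip_x  # metres past the wall surface
    if penetration <= 0.0:
        return 0.0  # grip is in free space: no resistance
    return min(stiffness * penetration, max_force)

# Free space, a light touch, and a hard shove into the wall.
forces = [wall_force(0.10), wall_force(-0.01), wall_force(-1.0)]
```

Run many times a second, a rule like this makes the hand-grip move freely until it meets the virtual surface and then push back harder the further the operator presses.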

After assembling a system in 1971, Brooks and his students were disappointed to learn that available computer technology wasn’t up to the task. They couldn’t simulate anything more complex than simple building blocks, let alone the intricacies of molecular docking. So the GROPE-II system was moved into storage, keeping a lonely vigil until the arrival of more powerful computers. Sadly, that wasn’t going to happen for another 15 years!