There is no denying it. Virtual reality is a breakthrough technology that allows you to step through the computer screen into a 3-D artificial world. You can look around, move around, and interact within computer worlds every bit as fantastic as the wonderland Alice found down the rabbit hole. All you have to do is put on a headset and then almost anything is possible—you can fly, visit exotic lands, play with molecules, swim through the stock market, or paint with 3-D sound and colour.

While VR has generated a lot of excitement in recent times, few people have actually tried it, and even fewer have tried an augmented reality or mixed reality headset, despite all the attention companies like Facebook (Meta) have thrown behind it. But to know where we are going we must look to the past and ask ourselves exactly where VR came from. Who first thought it up, and how is it accomplished? This is the history of VR from its humble origins in 1931 to 2021 and beyond. So we start with the big fundamental question… what is VR?

The truth is virtual reality isn’t a single technology, but rather a collection of different technologies…

1. Real-time 3D computer graphics

2. Wide-angle stereoscopic displays

3. Viewer (head) tracking

4. Hand and gesture tracking

5. Binaural sound

6. Haptic feedback

7. Voice input/output

Of these, the first three are mandatory. The fourth is conventionally used, but some apps and experiences can do without it. Items five, six and seven do little more than deepen the immersion you feel inside VR, and none of them is strictly needed.

Real-time 3D computer graphics

So let us start with real-time 3D computer graphics, because almost all VR and indeed AR headsets before 2012 were PC-powered. The generation of an image from a computer model, known as image synthesis, is well understood these days, and given sufficient time we can generate a reasonably accurate portrayal of what a modelled object would look like under given lighting conditions. However, the constraint of real-time means we have anything but time. The main lesson VR has learned from flight simulators is that update rate is extremely important.

We do not want the image to update fewer than 30 times per second, because lower rates often cause nausea and break whatever immersion the person is experiencing. Most VR headsets these days advertise a refresh rate in Hz, but that is not the same thing as frame rate (FPS): Hz is the headset’s maximum refresh rate, while FPS is the number of frames per second that the hardware generating the images can actually deliver. Although these are separate things, most headsets these days run at 60-90 frames per second.
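To make the difference between update rate and refresh rate concrete, here is a minimal Python sketch. The function names `frame_budget_ms` and `displayed_fps` are made up for this illustration and do not come from any headset SDK. It shows how little time the renderer has per frame at the rates mentioned above, and that the headset can never show more new frames per second than its refresh rate allows.

```python
def frame_budget_ms(rate_hz: float) -> float:
    """Time available to produce one frame at the given update rate, in milliseconds."""
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    return 1000.0 / rate_hz


def displayed_fps(render_fps: float, refresh_hz: float) -> float:
    """New frames shown per second: capped by the display's refresh rate."""
    return min(render_fps, refresh_hz)


# The rates discussed in the text: the 30 updates-per-second floor,
# and the 60-90 range most headsets run at.
for rate in (30, 60, 90):
    print(f"{rate} updates/s -> {frame_budget_ms(rate):.1f} ms per frame")
```

At 90 updates per second the whole scene has to be drawn in roughly 11 ms, which is why update rate constrains image quality so heavily.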

This demand constrains both the quality we can expect and the geometric complexity of our virtual worlds. Modern home graphics cards like the RTX 3090 can render independent facets (triangles) in the billions, along with a host of other software and hardware trickery that can make a virtual world seem pretty much life-like. All-in-one headsets like the Oculus Quest 2 only have mobile graphics processors, but even there the number of independent facets (triangles) is still in the hundreds of millions. So in short, the good news is that computer graphics are already beyond where they need to be in order to produce a realistic metaverse. So we move on to a technology that is a lot older than computer graphics but still just as important…

Wide Angle Stereoscopic Displays

While not everyone does, most people have stereoscopic, or binocular, vision – it is a natural consequence of having two coordinated eyes. Each eye sees the same world around us but from a slightly different position. The resulting different images are then fused by the brain to give the impression of a single view, but with auxiliary depth information. We also have other visual cues like focus which augment our depth perception.

Binocular vision is also fundamental to VR. Without it, we would not have sufficient depth cues to work in the synthetic environment nor would it look as real as the real world. This stereoscopic effect is achieved by placing independently driven displays in front of each eye. The image on each display is computed from a viewpoint appropriate for that eye. In the same way as your normal vision, the brain fuses these images together and derives depth information.
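The idea of computing each display's image from a viewpoint appropriate for that eye can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the function name `eye_positions`, the coordinate convention (right-handed, y up, yaw 0 looking down the negative z axis), and the default eye separation of 63 mm, which is only a typical adult value. A real headset would also account for pitch, roll and lens geometry.

```python
import math


def eye_positions(head_pos, yaw_rad, ipd_m=0.063):
    """Return (left_eye, right_eye) world positions for a head at head_pos.

    Assumed convention: right-handed axes, y up, yaw 0 looks down -z,
    positive yaw turns the head to the left.
    """
    x, y, z = head_pos
    # Unit vector pointing to the viewer's right for this yaw.
    right = (math.cos(yaw_rad), 0.0, -math.sin(yaw_rad))
    half = ipd_m / 2.0
    left_eye = (x - right[0] * half, y, z - right[2] * half)
    right_eye = (x + right[0] * half, y, z + right[2] * half)
    return left_eye, right_eye


# A head 1.7 m above the origin, looking straight ahead.
left, right = eye_positions((0.0, 1.7, 0.0), 0.0)
print("left eye: ", left)
print("right eye:", right)
```

The scene is then rendered twice, once from each of the two positions, and the small horizontal offset between the results is what the brain fuses into depth.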

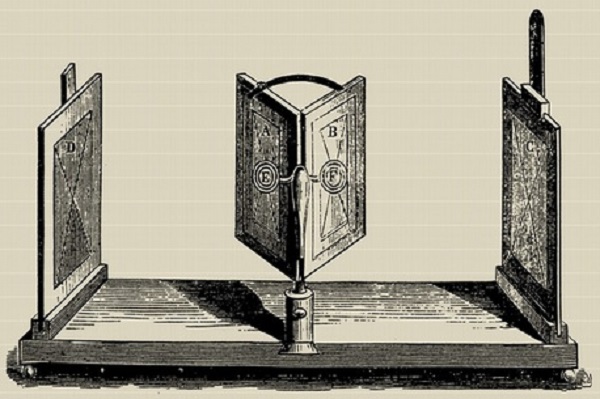

According to Wikipedia, the earliest known stereoscope was invented by Sir Charles Wheatstone. He presented the Wheatstone Stereoscope in 1838; it used a pair of mirrors at 45-degree angles to the user’s eyes, each reflecting a picture located off to the side. This was followed by the Brewster Stereoscope in 1849 and the Holmes Stereoscope in 1861.

These stereoscopic devices then went on to become toys in the form of the View-Master in 1939. This trademarked line of special-format stereoscopes was used with View-Master “reels”, which you could buy in separate themed packs. The reels were thin cardboard disks containing seven stereoscopic 3-D pairs of small transparent colour photographs on film. In fact, the View-Master brand would come around again in 2015 with the pretty awesome Mattel View-Master VR Headset, a mobile VR headset powered by a smartphone.

Head and Eye Tracking

The synthetic environment that the VR user enters is defined with respect to a global zero-point (origin) and has built-in conventions for directions (axes). The objects in this environment are defined with respect to this global coordinate system and much like these objects, the user has a position and orientation with respect to the same coordinate system. What the user can see in that world depends on the viewing frustum — the sub-volume of space defined by the user’s position, head orientation and field of view. This is similar to a movie camera but differs from the human visual system in that we can also move our eyes independent of our head orientation.
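As a rough illustration of the viewing frustum described above, the Python sketch below tests whether a point in the global coordinate system falls inside the volume defined by the user's position, head orientation and field of view. The function name `in_frustum`, the near and far distances, and the requirement that `forward` and `up` are perpendicular unit vectors are all assumptions of this sketch, not part of any particular VR runtime.

```python
import math


def _sub(a, b):
    return tuple(ai - bi for ai, bi in zip(a, b))


def _dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def in_frustum(point, eye, forward, up, h_fov_deg, v_fov_deg, near, far):
    """True if point lies inside the viewing frustum.

    forward and up are assumed to be perpendicular unit vectors.
    """
    right = _cross(forward, up)
    offset = _sub(point, eye)
    depth = _dot(offset, forward)          # distance along the view direction
    if not (near <= depth <= far):
        return False
    half_w = depth * math.tan(math.radians(h_fov_deg) / 2.0)
    half_h = depth * math.tan(math.radians(v_fov_deg) / 2.0)
    return abs(_dot(offset, right)) <= half_w and abs(_dot(offset, up)) <= half_h


# User at the origin, looking down -z with a 90-degree field of view.
view = dict(eye=(0, 0, 0), forward=(0, 0, -1), up=(0, 1, 0),
            h_fov_deg=90, v_fov_deg=90, near=0.1, far=100.0)
print(in_frustum((4, 0, -5), **view))   # in front and within the field of view
print(in_frustum((0, 0, 5), **view))    # behind the user
```

Only objects that pass a test like this need to be drawn for the current frame, and the test has to be repeated whenever the tracked head pose changes.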

Tracking is often broken into two different levels. 3DoF (DoF stands for degrees of freedom) means that rotation about the three axes of an object is tracked. Most mobile VR headsets and some standalone VR headsets like the Oculus Go are 3DoF, whereas most modern VR headsets are 6DoF (considered the only way forward by most). 6DoF tracks the position of the headset as well as its orientation, giving the true illusion that you are standing in a real-world environment, albeit a virtual one.
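One way to picture the difference is as the data each kind of tracker can report. The Python sketch below is purely illustrative: the class names `Pose3DoF` and `Pose6DoF` and the helper functions are invented here, and real tracking APIs typically use quaternions rather than yaw, pitch and roll angles.

```python
from dataclasses import dataclass


@dataclass
class Pose3DoF:
    """Orientation only: rotation about the three axes, in radians."""
    yaw: float
    pitch: float
    roll: float


@dataclass
class Pose6DoF(Pose3DoF):
    """Orientation plus position in metres within the global coordinate system."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0


def track_3dof(rotation, translation):
    # A 3DoF headset has no way to report translation, so it is dropped.
    return Pose3DoF(*rotation)


def track_6dof(rotation, translation):
    return Pose6DoF(*rotation, *translation)


# The user turns their head slightly and leans 0.3 m to the side.
rotation, translation = (0.1, 0.0, 0.0), (0.3, 0.0, 0.0)
print(track_3dof(rotation, translation))
print(track_6dof(rotation, translation))
```

With the 3DoF pose the lean is lost and the virtual world appears to move with the user's head, whereas the 6DoF pose lets the renderer shift the viewpoint to match.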

All other tech and software relating to VR has only one task: making the virtual world seem more real and more immersive. These days, with feedback vests, hand tracking, motion trackers, full-body trackers and even eye movement tracking, a virtual world can feel very real indeed. And that is what VR is in a nutshell! For the next part, we start where most other articles start, and that is with Morton Heilig’s Sensorama theatre.